In November 2019, Adrian Raper described in a blog post how the Language Centre of Universitas Indonesia (LBI) had decided to experiment with digital placement testing, using the Dynamic Placement Test. The motivation was twofold: that a digital test “is more relevant to the younger generation”; and, with almost uncanny foresight, because “an online placement test is more flexible and convenient because students do not need to be on campus to take the test. They can do the test […] at home.”

With lockdown, the second motivation was swiftly proved valid. How about the first? We have run two test sessions since the initial session in November, both of which included a short survey at the end for test takers to share their experience. The first session ran between 6 May and 22 June with 506 test takers, 66 of whom responded to the survey. The most recent session ran between 12 August and 26 September with 641 test takers, 82 of whom responded. The response rate (about 13% in each session) is a little low, but it is still a reasonable sample, and more than we could have collected had we interviewed test takers in person, as originally intended.
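For readers who want to check the sample sizes, here is a minimal sketch of the response-rate arithmetic in Python. The figures are those quoted above; the session labels and the `response_rate` helper are our own illustrative names, not part of any test platform.

```python
# Test-taker and respondent counts as reported for the two survey sessions.
sessions = {
    "Session 1 (6 May to 22 June)": {"test_takers": 506, "respondents": 66},
    "Session 2 (12 August to 26 September)": {"test_takers": 641, "respondents": 82},
}


def response_rate(respondents: int, test_takers: int) -> float:
    """Return the survey response rate as a percentage (hypothetical helper)."""
    return 100 * respondents / test_takers


for name, counts in sessions.items():
    rate = response_rate(counts["respondents"], counts["test_takers"])
    print(f"{name}: {rate:.1f}% of test takers responded")
```

Both sessions come out at roughly 13%, so the two samples are comparable in relative size.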

The first three questions focused on comparing the new digital format against the paper-based tests the students were familiar with. The final two questions were about timing, and the level of difficulty of the test itself.

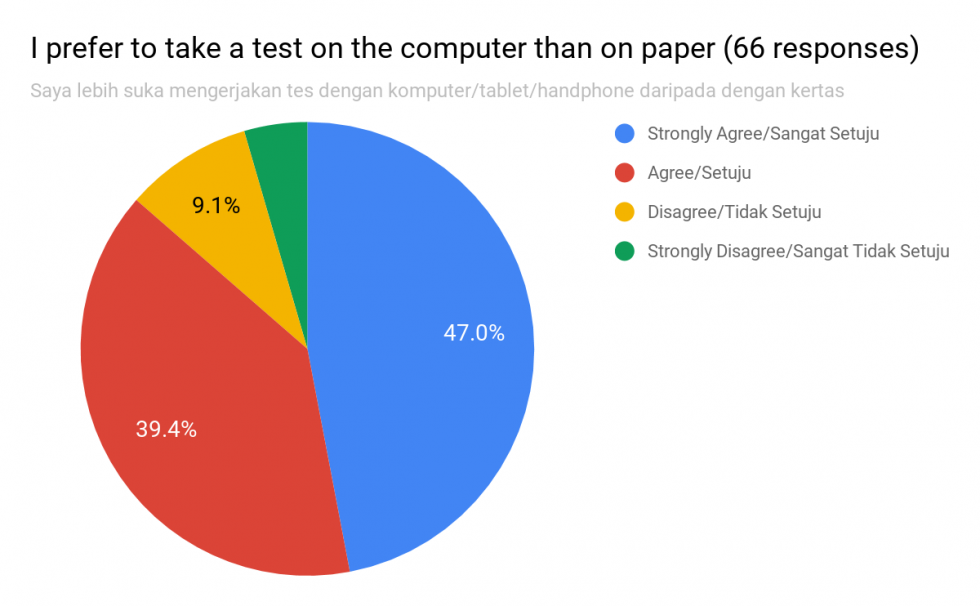

The good news was that feelings about the digital test were almost uniformly positive. In both tests, over 75% of candidates said they agreed or strongly agreed that they prefer to take a test on the computer rather than on paper.

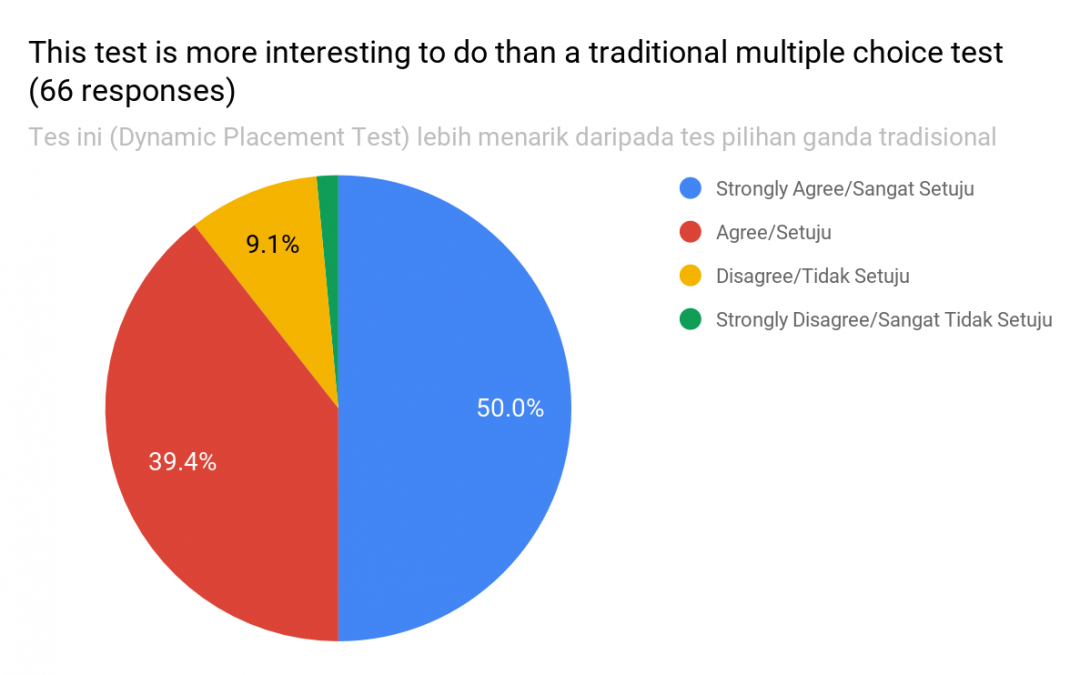

Consistent with this, and equally compelling, the findings showed that over 85% in both sessions found the test more interesting than the kind of traditional multiple choice test that they were used to.

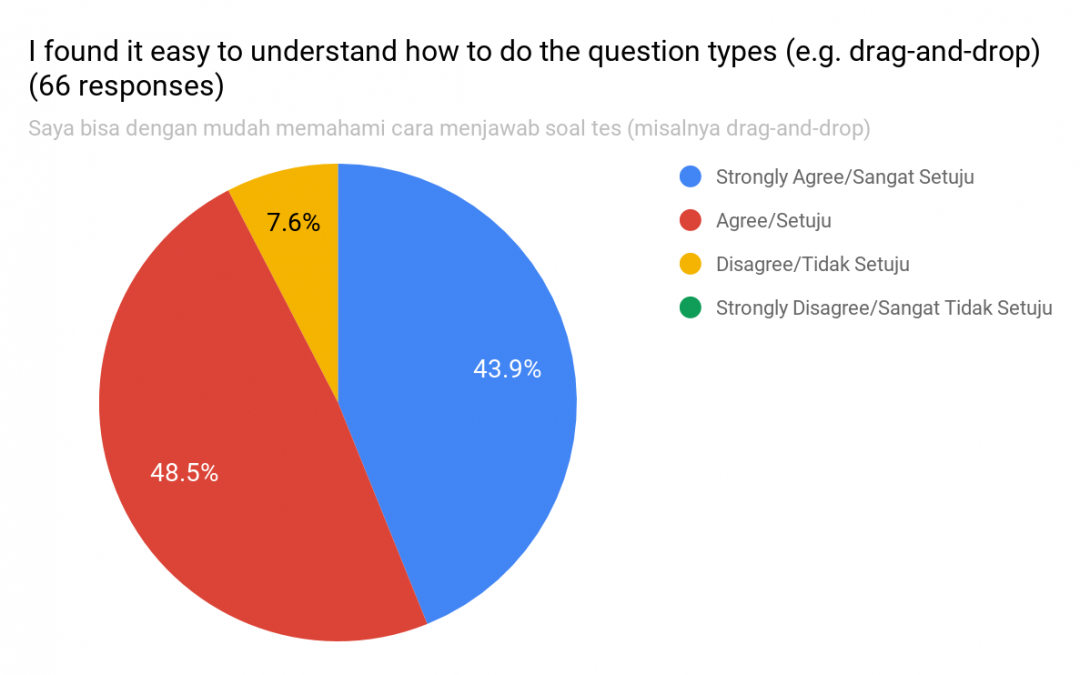

And an even higher percentage, 92.4% in session 1 and 93.9% in session 2, found the method of answering the test items (e.g. drag and drop) easy to understand.

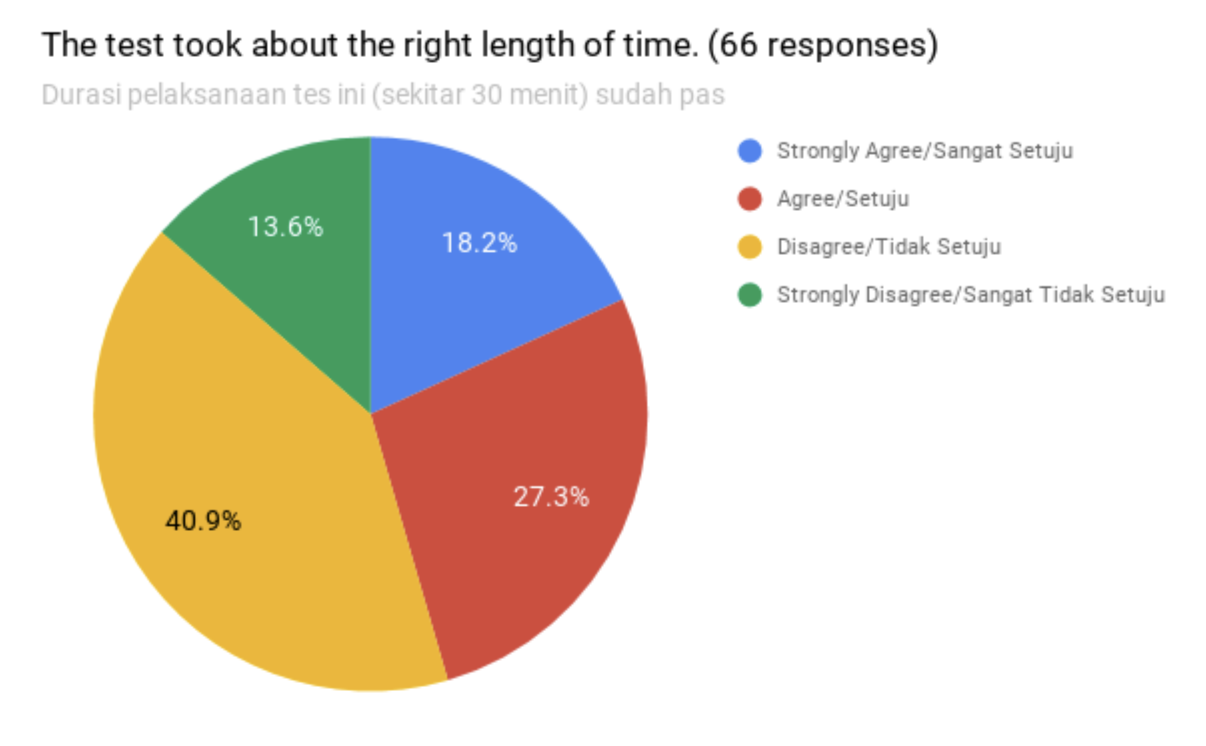

The last two questions proved equally interesting. Question 4 asked whether the test took about the right length of time. We might expect a couple of factors to be at play here. Firstly, is there a correlation between the result achieved and the attitude to the time available? We would certainly expect those who sail through it to be more satisfied with the time available than those who struggle. We did not measure that correlation, but it would be interesting to do so in the future.

Secondly, the number of questions a test taker is able to answer in the time available is itself a function of the test, and it is one element in measuring their language ability. (You would, of course, expect a C2 candidate to be able to answer more questions more quickly than a B1 candidate.) The other issue is that not having a fixed number of questions to answer within a defined timeframe runs against most test takers’ expectations: after all, we are taught that in IELTS Reading, for example, we will have to answer 40 questions in an hour, and that we therefore have 90 seconds for each question. We are therefore able to exactly measure our progress through the test. Adaptive tests do not work in the same way — and neither, in fact, does real-life application of language.

We would therefore expect a more even distribution of responses to this statement compared to the others, and that is what we got. The results differed between the two sessions, but around half of respondents thought the timing was about right, whilst the other half would have preferred more time (we assume, although some may of course have wanted less):

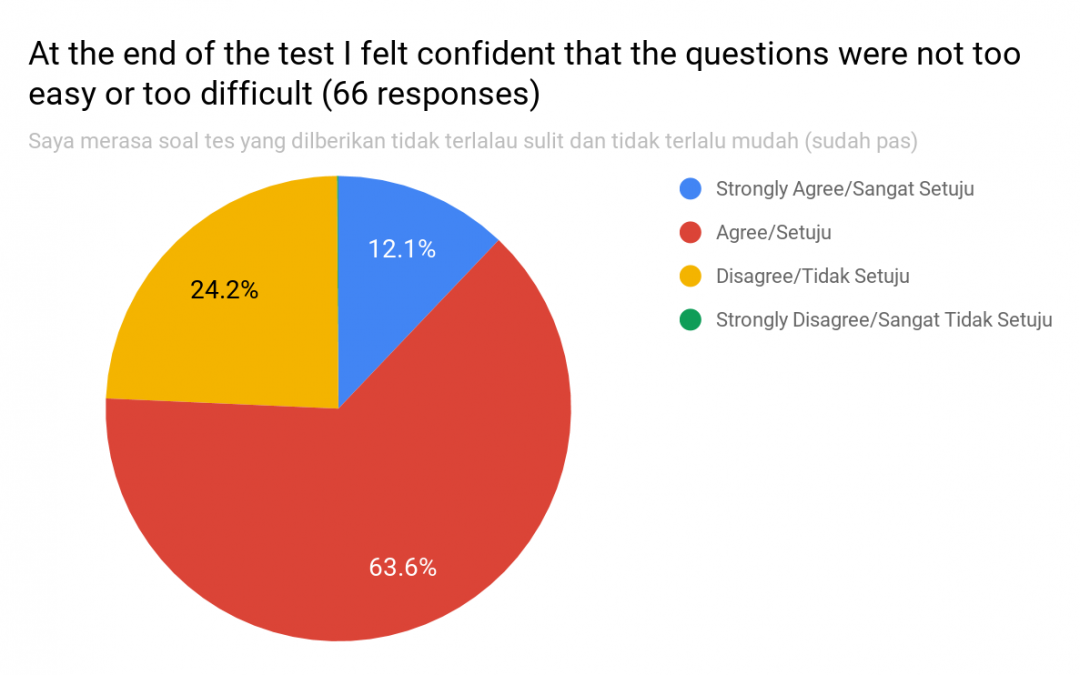

In general, this suggests that test takers found the test challenging, but it seems from responses to the final question that they did not find it too challenging. The statement was: “At the end of the test I felt confident that the questions were not too easy or too difficult.” As this is an adaptive test, we would worry if they found the test too easy (even C2 candidates should be stretched); if it is consistently too difficult, we are probably not learning very much about them. We were therefore pleased to see that over three quarters of respondents in both test sessions agreed with the statement, and fewer than a quarter disagreed.

Conclusion

The findings overwhelmingly support the hypothesis put forward by LBI’s Senior Director, Ibu Sisilia, and Vice Director, Ibu Memmy, that students prefer a digital test to a paper-based test. In addition, real life took a hand in confirming their second hypothesis: it is indeed more convenient to be able to take the test off-campus, including at home.

Further reading: